How the GPU works

What the most expensive part of the PC is responsible for

Computer pricing always starts with the graphics card. Whether it is a simple office box or a monstrous gaming rig, a small rectangle with fans makes up the lion's share of the build's price. That price is doubly surprising when you remember that a computer can function without a discrete graphics card. It is triply strange given that the video card only sends to the monitor the image that the processor tells it to draw. As always, what matters is not the number of tasks but their complexity...

The design of a video card is deceptively complex

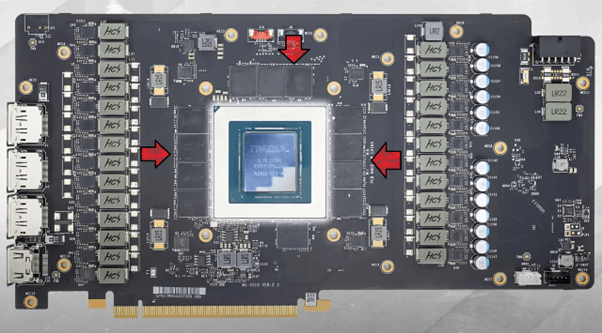

Simplified to the extreme, a video card is a board made of textolite, a material that does not conduct electricity, with a chip in the middle. The board itself must not conduct electricity, because that job belongs to the tracks laid across it. The chip is not soldered directly to the board; it sits on a substrate. The graphics chip looks much like a CPU. Solder balls join the chip and the substrate into a single unit.

The chip consists of functional blocks: the texture block stretches textures over a 3D model; the compute cores (ALUs) calculate lighting, glare, and reflections; the rasterization block forms the image before it is displayed on the monitor. A substrate is needed to tie all these processes together and to supply voltage to the chip. If the chip loses contact with the substrate, artifacts appear on the screen. This fault is known as chip failure.

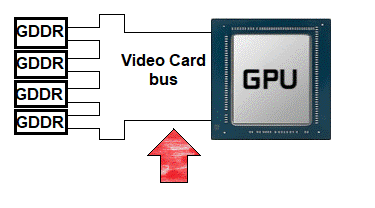

The video card receives the data it processes from the processor and RAM. To store and work with that data, it needs its own video memory, or VRAM.

The chips that surround the GPU are GDDR memory chips; each one can hold up to 2 GB. GDDR chips are attached to the board the same way the GPU is: with solder balls. Data from the GDDR chips takes some time to reach the GPU, and the memory bus of the video card is responsible for that transfer. The rule is easy to remember: the wider the bus, the more data can reach the video chip at once. Bus width is measured in bits, for example 128, 256, or 512 bits.
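To make the bus-width rule concrete, here is a minimal sketch of the usual back-of-the-envelope estimate of peak memory bandwidth: bus width in bits, divided by 8 to get bytes, multiplied by the effective data rate per pin. The function name and the 14 Gbit/s data rate are illustrative assumptions, not figures from this article.

```python
def peak_bandwidth_gb_s(bus_width_bits: int, data_rate_gbps_per_pin: float) -> float:
    """Theoretical peak memory bandwidth in GB/s.

    bus_width_bits: width of the memory bus (for example 128, 256, 512).
    data_rate_gbps_per_pin: effective transfer rate per pin in Gbit/s
        (an assumed, illustrative value; real cards vary).
    """
    # bits per transfer -> bytes per transfer, times transfers per second
    return bus_width_bits / 8 * data_rate_gbps_per_pin


if __name__ == "__main__":
    # Same assumed memory speed, different bus widths.
    for width in (128, 256, 512):
        print(f"{width}-bit bus: {peak_bandwidth_gb_s(width, 14.0):.0f} GB/s")
```

With the memory speed held constant, doubling the bus width doubles the estimate, which is the relationship the paragraph above describes. Real-world throughput is lower than this theoretical peak.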

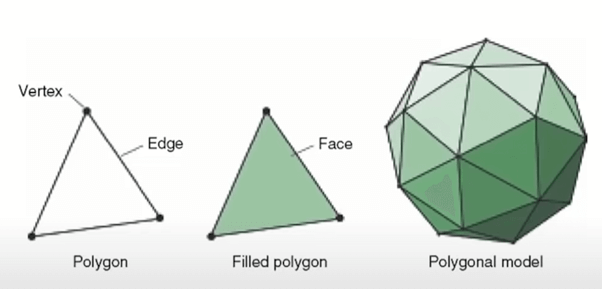

At this stage, the way a video card works may seem very similar to the way the processor and RAM work together. The key difference is the core count: even high-end consumer processors have a few dozen cores at most, whereas a graphics card has thousands. The processor handles complex but narrowly focused tasks - geometry, artificial intelligence, and so on - while the video card not only works on its tasks but also stores the data for them. Each GPU core is slower than a CPU core, but together they work through a far larger number of tasks and much larger volumes of data. That is why a video card needs thousands of cores. What are those tasks? They are the pixels on the screen: where each one sits and how it responds to light. Any 3D image is built from tiny triangles that change color and end up in the right place at the right time. Where they move is decided by the processor; the graphics card determines the "how".
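The idea of "many small, independent tasks" can be sketched with a toy rasterizer. The code below uses the common edge-function test to decide, pixel by pixel, whether a pixel center lies inside a triangle. It is a simplified illustration with made-up function names, not how any particular GPU or driver is implemented; the point is that each pixel test depends on nothing but its own coordinates, which is why thousands of cores can run such tests side by side.

```python
from typing import List, Tuple

Point = Tuple[float, float]
Triangle = Tuple[Point, Point, Point]


def edge(a: Point, b: Point, p: Point) -> float:
    """Signed-area test: the sign tells which side of edge a->b the point p is on."""
    return (b[0] - a[0]) * (p[1] - a[1]) - (b[1] - a[1]) * (p[0] - a[0])


def covers(tri: Triangle, p: Point) -> bool:
    """True if p lies inside the triangle or on its border (either winding order)."""
    a, b, c = tri
    w0, w1, w2 = edge(a, b, p), edge(b, c, p), edge(c, a, p)
    all_non_negative = w0 >= 0 and w1 >= 0 and w2 >= 0
    all_non_positive = w0 <= 0 and w1 <= 0 and w2 <= 0
    return all_non_negative or all_non_positive


def rasterize(tri: Triangle, width: int, height: int) -> List[str]:
    """Test every pixel center on its own; this loop runs them one after another,
    whereas a GPU would spread the same independent tests across its cores."""
    rows = []
    for y in range(height):
        row = ""
        for x in range(width):
            row += "#" if covers(tri, (x + 0.5, y + 0.5)) else "."
        rows.append(row)
    return rows


if __name__ == "__main__":
    # Hypothetical triangle in pixel coordinates.
    triangle = ((1.0, 1.0), (10.0, 2.0), (4.0, 7.0))
    for line in rasterize(triangle, 12, 8):
        print(line)
```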

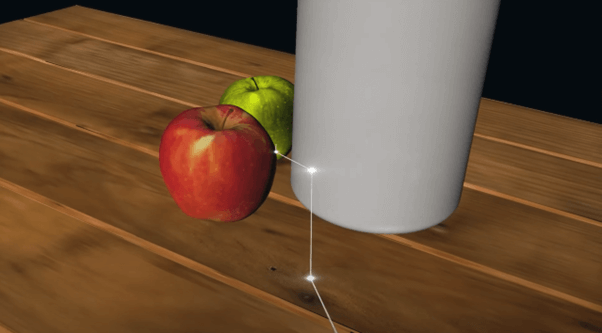

The screenshot above, however, shows the model as if under a microscope. Here is how a 3D model looks before the graphics card has worked on it and after:

Put as simply as possible, the graphics card just stretches a texture over the 3D model. The card's memory stores glare, reflections, effects, and how deeply light penetrates a material. For example, light sinks into Leon's sheepskin jacket, whereas it bounces off a metal shutter. Before 2018, the play of light was pre-programmed into games, scenes, and videos, but the arrival of the Turing microarchitecture changed all that.

In 2018, Nvidia introduced a new technology to the world: RT cores. If you have been wondering why turning RTX on doesn't dramatically transform the graphics in games, here's the answer: RTX doesn't increase the number of pixels on the screen, it calculates lighting in real time. Without RTX, all lighting follows a pre-written algorithm. With RTX on, the computer traces rays from the viewer's eye and gathers data about every object they hit, in real time. What does that give you? The picture gets deeper, but not prettier. And why did RTX steal my frames? Because each ray is an equation of refraction, absorption, and reflection of light.
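To give a feel for what "each ray is an equation" means, here is a minimal sketch of the arithmetic for one ray: find where it hits a sphere by solving a quadratic, then compute the mirror-reflected direction. The scene, the helper names, and the choice of a sphere are assumptions made for illustration; dedicated hardware and real game engines work with triangles and acceleration structures and are far more elaborate.

```python
import math
from typing import Optional, Tuple

Vec = Tuple[float, float, float]


def sub(a: Vec, b: Vec) -> Vec:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def scale(a: Vec, s: float) -> Vec:
    return (a[0] * s, a[1] * s, a[2] * s)


def dot(a: Vec, b: Vec) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def hit_sphere(origin: Vec, direction: Vec, center: Vec, radius: float) -> Optional[float]:
    """Distance along the ray to the nearest hit in front of the origin, or None.

    Assumes `direction` has unit length and the origin is outside the sphere.
    Solves |origin + t*direction - center|^2 = radius^2 for t.
    """
    oc = sub(origin, center)
    b = 2.0 * dot(oc, direction)
    c = dot(oc, oc) - radius * radius
    disc = b * b - 4.0 * c
    if disc < 0:
        return None  # the ray misses the sphere
    t = (-b - math.sqrt(disc)) / 2.0
    return t if t > 0 else None


def reflect(direction: Vec, normal: Vec) -> Vec:
    """Mirror reflection about a unit normal: r = d - 2 (d . n) n."""
    return sub(direction, scale(normal, 2.0 * dot(direction, normal)))


if __name__ == "__main__":
    eye = (0.0, 0.0, 0.0)
    ray = (0.0, 0.0, -1.0)  # looking straight ahead
    center, radius = (0.0, 0.0, -5.0), 1.0

    t = hit_sphere(eye, ray, center, radius)
    if t is not None:
        hit_point = (eye[0] + ray[0] * t, eye[1] + ray[1] * t, eye[2] + ray[2] * t)
        normal = scale(sub(hit_point, center), 1.0 / radius)
        print("hit at distance", t, "reflected direction", reflect(ray, normal))
```

A single frame needs this kind of calculation for a very large number of rays, and each reflected ray can spawn further ones, which is where the frame rate goes.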

It's like a test at school. Which is easier: copying from a cheat sheet or learning everything by heart? But in a test, as in real life, there is no guarantee that you will draw "the right ticket" and will not have to adapt to new conditions in a hurry.